Today’s most valuable brands have a deep understanding of their audience. They leverage this understanding to create more informed and inspired solutions and reduce risk. User research drives priorities and validates the importance of offerings and features. By creating user-centric designs, they are able to optimize workflows and improve user satisfaction.

Digital Transformation is hard. Success requires organizations to:

Experience Research helps organizations gain a deeper understanding of their audience (internal users, such as employees, and external users, such as customers). Intevity uses design thinking, a flexible research process, a variety of research methodologies, and knowledge of best practices to ensure a meaningful outcome.

Organizations need to deeply understand those they serve in order to be able to create effective solutions for them. This understanding is achieved through research that sheds light on audience behaviors, needs, motivations, pain points, and goals.

Research on one’s audience typically includes a variety of internal and external stakeholders such as employees, customers, end-users, market segments, and whole industries. This article focuses on research benefiting end-users, which can represent any type of audience.

Aligning user goals with organizational goals makes it possible to quantify the return of an improved design, create greater buy-in with key business stakeholders, and encourage focus on enhancements that will have the greatest added value to both the user and the organization.

Experience research (how we refer to our overarching discipline for user, customer, and business research) is a set of tools and processes for learning about users’ behaviors, needs, motivations, pain points, and goals. Research is used in every stage of a project lifecycle, from upfront exploratory research all the way through end-user validation and iteration/improvement planning.

Experience Research enables a deep understanding of users with the goal of improving adoption rates, increasing user engagement, and reducing error rates. For internal projects (e.g., a software upgrade), good research can lead to increased job satisfaction and even improve retention rates.

Time spent upfront on research reduces rework later. It can also increase stakeholder buy-in. Decision makers are reassured by having a deeper understanding of users; in fact, one of the most common concerns we hear from clients is, “I don’t know what my users want.” Properly defining the problem upfront helps ensure the correct solution is developed. Meanwhile, users’ spirits are buoyed because they finally have someone listening to them.

Conducting research throughout the product lifecycle identifies additional opportunities, unmet needs, and emerging trends. Research creates alignment on expectations. It is focused on answering the five W’s: Who, What, Why, When, and Would. Research is not concerned with answering How; that is answered during subsequent work. However, research can validate whether a particular answer to How (a solution) will likely be received.

Experience Disciplines at a Glance

Customer Experience: Customer Experience (CX) is the understanding and management of the end-to-end relationship an organization has with its customers. CX is broader than UX, as it represents all interactions (the total experience) a customer has with your organization or brand.

User Experience: User Experience design (UX) zooms in specifically on the interactions a customer or user has with a particular product, website, app, or service your organization offers.

Business Experience: Business Experience (BX) is a lesser-used term that refers to a hybrid role (a little business analysis, a little UX, and a little strategy) that improves the experience within a business (processes, business design, etc.).

Intevity combines these disciplines simply as ‘Experience’ to represent our expertise in all three.

Innovation, especially when it enables you to do more with less, is the ultimate business advantage. In our experience, design thinking is one methodology that helps drive innovation and innovative thinking; many have started to treat it as synonymous with experience research. It develops empathy, helps teams transcend innate biases, and evolves toward better, more sustainable solutions.

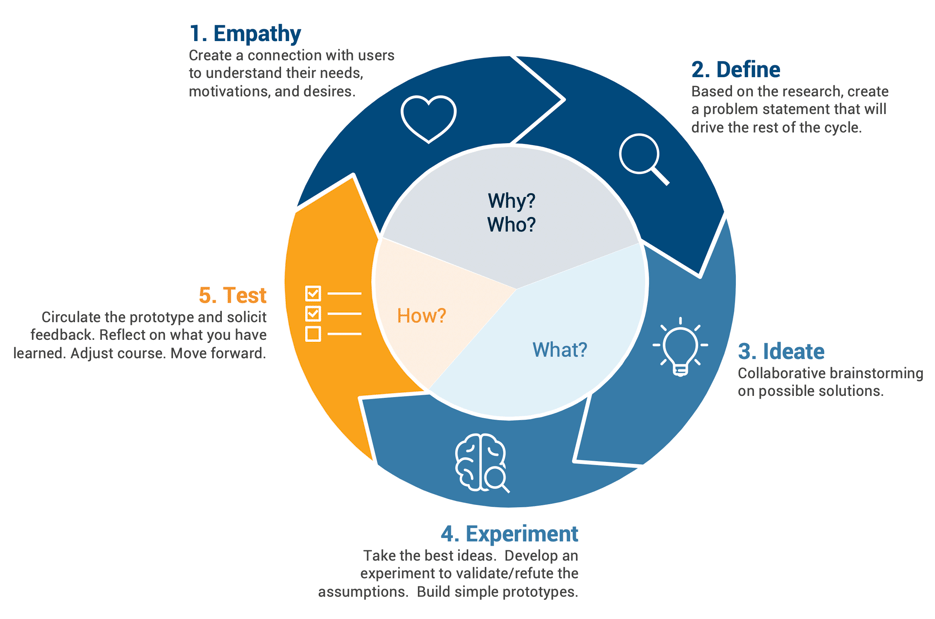

Design Thinking is a collaborative framework used by designers to solve complex problems by putting humans, and their needs, at the forefront. It is solution-focused and action-oriented.

Another way of looking at Design Thinking is that it ensures the problems you solve are the right ones and that your technology improves the lives of those who use it rather than frustrating them.

In Design Thinking, research is a critical ingredient at every step in the process:

Instead of just converting a paper form to a digital one, we used Experience Research combined with Design Thinking to help us create a new human-centric solution for managing workers’ compensation claims. Putting customers first resulted in a solution that had both high adoption rates and superior user satisfaction scores.

Intevity worked with a large-scale e-commerce and cloud provider to introduce Design Thinking principles. These principles were shared throughout the organization and helped re-envision its sales process to quickly build trust with customers by delivering incremental value early and often.

Intevity worked with a large financial services provider to create a mobile-first compliance solution. By applying Design Thinking principles to the build, we enabled the company to achieve internal compliance on submissions for all of its employees on the first try.

The research process can be summarized as an iterative three-step process:

The first step of planning is to identify objectives. Common objectives for research are:

After objectives have been identified, the specific research methods should be evaluated. One should consider both qualitative and quantitative research to ensure a well-rounded study. Beyond the research objectives themselves, the relevant research methods will depend upon several factors, including:

Based on the answers to these questions, the available research methods can be examined. The Nielsen Norman Group has published a useful guide on matching research methods across the dimensions of:

NOTE: In practice, while many organizations would like to include a significant quantitative aspect to their research, they often lack the resources necessary to do so.

The Perils of Inside-Out Thinking

Naysayers of research often focus on the expense and time required to do upfront research. They also often question the need for research with statements such as, “Why do we need to talk to the users? We know more about this topic than they do. Just go build it.” In the product management realm, this is known as Inside-Out, or Inside-Outside, thinking. Inside-Outside thinking is typified by focusing solely on improving internal operational efficiencies, or by repackaging or recombining existing tools in the hope that doing so will yield a breakthrough product. Blockbuster Video’s failure to understand the underlying emotions of its customers (to build empathy) ultimately led to its bankruptcy. Meanwhile, Netflix tapped into those emotions to create a product that resonated more strongly with customers.

Prioritization is important during the planning process. In large, complex systems with highly diverse stakeholder groups, it may be necessary to break those groups into multiple tiers based on their level of impact or their degree of influence on the system. Often, during the initial Experience Research phase, only the top-tier users and the most critical influencers are researched; secondary and tertiary tiers may not be addressed until much later.

Once the objectives, methods, and users have been identified, a lightweight plan should be documented along with the high-level research questions to be asked. In large-scale projects and programs, this plan should be integrated with the overall project plan, especially the communication management and stakeholder management plans.

Conducting research is often an iterative process. In many cases, the answers to one set of questions lead to more questions. It takes practice to discern which questions are essential to answer during Experience Research and which can wait until later. A few key points to remember when conducting research:

One of the biggest challenges researchers face is obtaining enough participation. Keep in mind that, when conducting interviews and usability tests, five to 10 participants per stakeholder group is often enough. Beyond that point, responses tend to converge, and the new insights gleaned from additional interviews or observational studies decrease significantly. If responses are highly divergent, it may be necessary to re-evaluate how stakeholder groups are broken down; for example, there may be an unidentified subtype of user.
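One way to see the diminishing returns described above is to count how many previously unseen themes each successive session contributes. The sketch below assumes each session has already been coded into a set of theme labels; the function name and the sample themes are hypothetical, for illustration only.

```python
def new_themes_per_session(sessions: list[set[str]]) -> list[int]:
    """Return how many previously unseen themes each session contributes."""
    seen: set[str] = set()
    counts: list[int] = []
    for themes in sessions:
        fresh = themes - seen  # themes not observed in any earlier session
        counts.append(len(fresh))
        seen |= fresh
    return counts


if __name__ == "__main__":
    # Hypothetical coded themes for six interviews with one stakeholder group.
    sessions = [
        {"slow search", "unclear status", "duplicate entry"},
        {"slow search", "missing export"},
        {"unclear status", "duplicate entry", "too many clicks"},
        {"slow search", "too many clicks"},
        {"missing export", "unclear status"},
        {"slow search"},
    ]
    print(new_themes_per_session(sessions))  # [3, 1, 1, 0, 0, 0]
```

When the count stays near zero for several sessions in a row, additional interviews with that group are likely adding little; a group that keeps producing new themes may hide a subtype of user.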

When possible, record sessions. Be sure to understand the policies, procedures, and relevant laws prior to recording; always be sure to obtain written consent from people beforehand. Recordings allow other project team members to obtain a better, or potentially different, understanding of the responses along with enabling the researcher to focus fully on the conversation instead of taking notes.

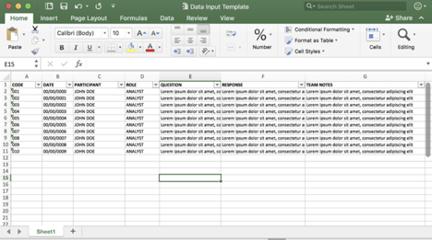

After completing a session, documenting and coding it is essential. Coding is the process of organizing research and responses in a meaningful way. It can take many forms, but it is often done in a spreadsheet. Coding sorts the data into consistent categories, which begins the synthesis process by making patterns and trends easier to recognize. For example, in addition to the raw notes, it is useful to categorize emotional responses on a three- or five-point scale, which also makes the data easier to sort later. Tally marks and prioritization matrices are additional examples of coding.
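As a rough illustration of coding, the sketch below treats each spreadsheet row as a (participant, topic, emotion) record, with emotion on a five-point scale, then tallies mentions per topic and averages the emotion score. The column layout, topics, and scores are hypothetical assumptions, not data from a real study.

```python
from collections import Counter, defaultdict
from statistics import mean

# Each row mirrors one line of a hypothetical coding spreadsheet:
# (participant, topic, emotion), where emotion uses a five-point scale
# from 1 (very negative) to 5 (very positive).
rows = [
    ("P1", "claim submission", 2),
    ("P1", "status tracking", 1),
    ("P2", "claim submission", 3),
    ("P2", "status tracking", 2),
    ("P3", "status tracking", 1),
    ("P3", "document upload", 4),
]


def summarize(rows):
    """Tally mentions per topic and average the emotion score for each."""
    tallies = Counter(topic for _, topic, _ in rows)
    scores = defaultdict(list)
    for _, topic, emotion in rows:
        scores[topic].append(emotion)
    # Most-mentioned topics first, each paired with its mean emotion score.
    return [(topic, count, round(mean(scores[topic]), 2))
            for topic, count in tallies.most_common()]


for topic, count, avg in summarize(rows):
    print(f"{topic}: {count} mentions, mean emotion {avg}")
```

Sorting by tally surfaces the most frequently raised topics, while the mean score flags which of them carry the most negative emotion.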

Some of the artifacts that may be produced during this stage are:

At its core, synthesis is the process of analyzing the research to look for patterns, unexpected connections, and the root causes of user behaviors, frustrations, and needs.

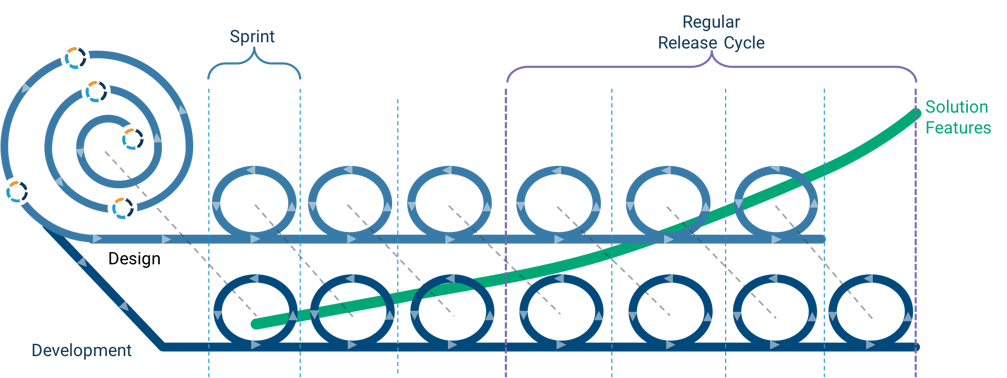

Often, synthesis will raise additional questions, and several passes may be required to arrive at the real answers that define the mission, strategy, and tactics for the initiative or product. This fits with the models of design thinking, lean, and iterative product development.

Synthesis within the overall context of Experience Research often requires additional research and design activities to validate findings before formal development begins. When synthesis is done in the context of targeted, ongoing research, only limited or small-scale validation may be required.

For example, in a multi-month Experience Research effort, it may be necessary to conduct a follow-on design or prototyping phase to validate the approach proposed by synthesis. In the context of a Design Sprint, however, validation may be as simple as running a usability test on a paper prototype during the final day of the sprint.

The outcomes and deliverables of synthesis depend on what type of experience research is being performed, or on where in the experience research process synthesis takes place. The table below outlines desired outcomes and examples of potential deliverables by experience research type:

| Experience Research Type | Desired Outcomes | Potential Tactics and Artifacts |
|---|---|---|
| Mission Development | Alignment on: | Artifacts may include: |
| Strategic Planning | Alignment on: | Artifacts may include: |
| Tactical Planning | Definition of: | Artifacts may include: |

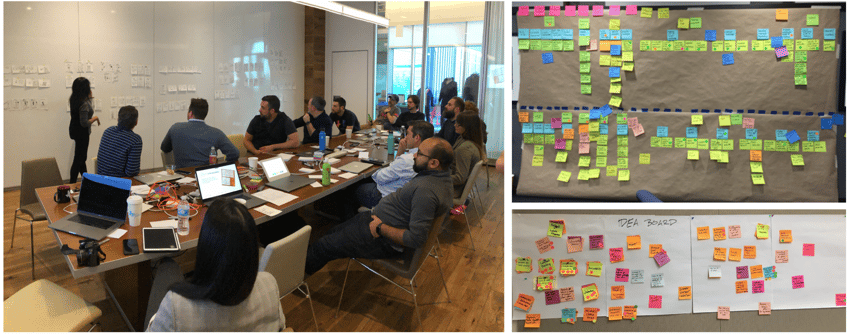

Stakeholder workshops allow collaborative brainstorming and prioritization of feature sets based on what the business believes is most important to pursue and would generate the most value.

User story workshops are used to decrease risk and rework by developing detailed user stories and acceptance criteria in collaboration with the business. User stories are plain-language, detailed descriptions of desired system functionality written from the user's perspective. They are a convenient way of expressing business needs to various audiences. When user stories are written in a collaborative workshop, organization stakeholders are able to articulate exactly what functionality they are looking for and even sketch out ideas.

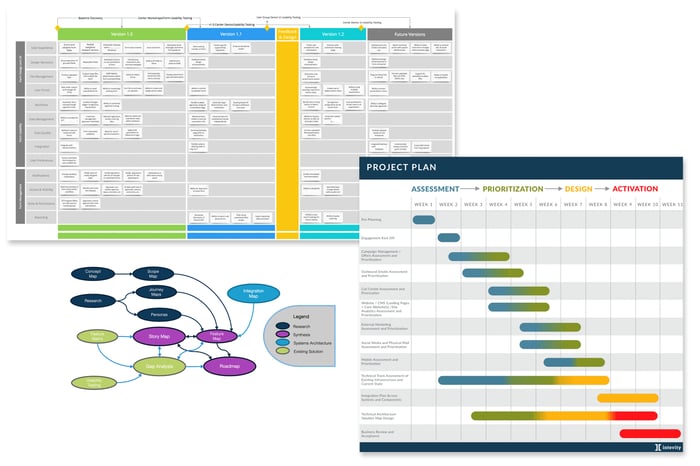

To help illustrate scope and sequencing, Epics (large feature sets used in agile software development) are aggregated in a Maturity Matrix. We often use the MoSCoW method (Must have, Should have, Could have, Won't have) to help stakeholders prioritize functionality. The Maturity Matrix shows how mature each piece of functionality will be over time, starting with the current state.
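A Maturity Matrix can be prototyped as a small table keyed by epic. The minimal sketch below assumes each epic carries a MoSCoW category and a planned maturity level per phase; the epic names, the phase names, and the 0 to 3 maturity scale are hypothetical and do not reflect a specific Intevity template.

```python
from enum import IntEnum


class MoSCoW(IntEnum):
    """MoSCoW categories; lower values sort as higher priority."""
    MUST = 0
    SHOULD = 1
    COULD = 2
    WONT = 3  # "Won't have (this time)"


# Hypothetical epics: priority plus planned maturity per phase
# (0 = not present, 1 = basic, 2 = enhanced, 3 = fully mature).
PHASES = ["Current", "Release 1", "Release 2"]
epics = {
    "Online claim intake": (MoSCoW.MUST, [0, 2, 3]),
    "Status notifications": (MoSCoW.SHOULD, [0, 1, 2]),
    "Document upload": (MoSCoW.MUST, [1, 2, 2]),
    "Chat support": (MoSCoW.COULD, [0, 0, 1]),
}


def maturity_matrix(epics):
    """Order epics by MoSCoW priority, breaking ties by epic name."""
    return sorted(epics.items(), key=lambda item: (item[1][0], item[0]))


print(f"{'Epic':<22}{'Priority':<10}" + "".join(f"{p:<11}" for p in PHASES))
for name, (priority, levels) in maturity_matrix(epics):
    print(f"{name:<22}{priority.name:<10}" + "".join(f"{lvl:<11}" for lvl in levels))
```

Reading across a row shows how one epic matures over time; reading down a column shows what each release delivers, with Must-have epics listed first.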

Personas are fictional composite characters created as part of experience research. They represent different user types by expressing their major needs and expectations. Although fictional, personas are grounded in real people and real behaviors, and they are used to help prioritize features. Together, the personas form a memorable cast of characters that team members and stakeholders can use to focus conversations on what the solution needs to achieve.

Personas are typically separated into two or more tiers. The tiers represent the relative weighting of the impact that the persona should have on the solution.

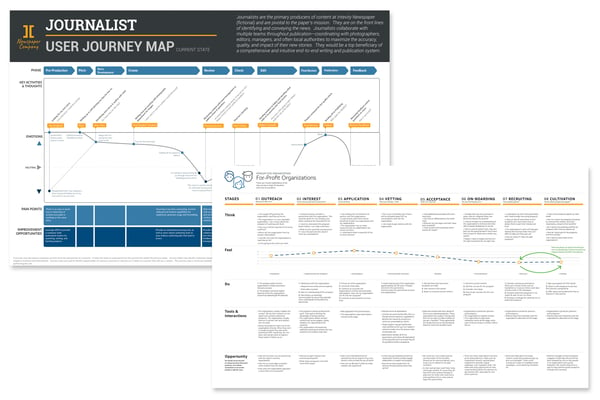

Journey maps document a business process from the perspective of a persona. They help the team understand how the persona fits within today's process and identify motivators (positive emotions and interactions) and demotivators (negative emotions and interactions). Journey maps are used to identify opportunities to improve a process or solution as it relates to a particular persona's experience.

Roadmaps bring all of our research and definitions into a single track that outlines implementation for planning purposes.

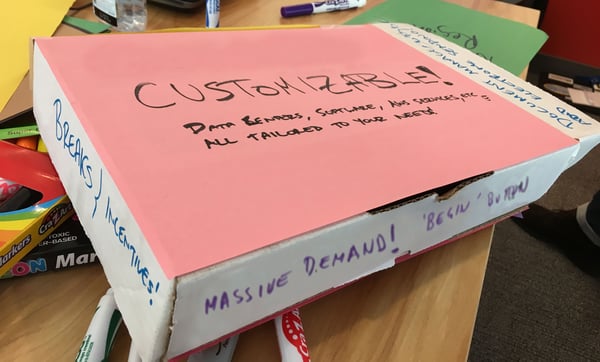

Mock product boxes created with stakeholders during a workshop help the team understand what product the business wants customers to buy and what problems customers are trying to solve with it.

A sitemap is a visual diagram showing the hierarchical linking of pages within a website, with connecting lines showing how pages branch out from one another.

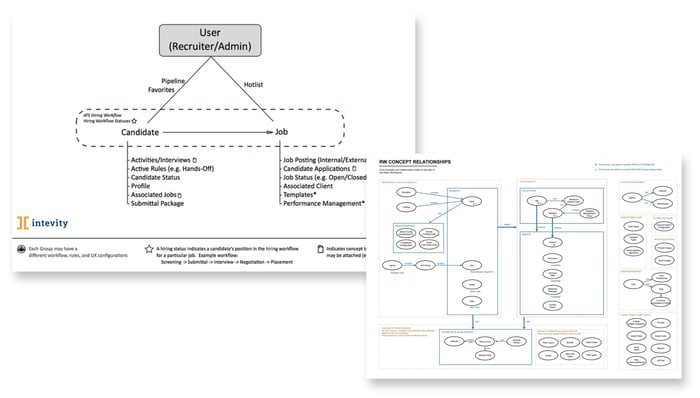

Graphical representation of how key organizational concepts relate to each other.

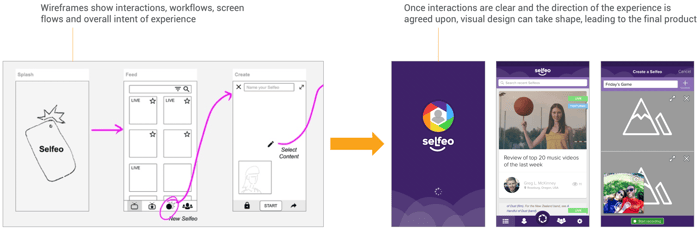

Low-fidelity, concept-driven visuals focused on interaction lead to better finished products. These exercises combine user experience design and user interface design.

The following tips, tricks, and traps will help guide your research efforts.


The Role of Data in Research

Analytics on both big and small data can be useful tools when planning research. Data can help identify areas of concern within an existing system. For example, instrumenting a website with an analytics tool may reveal thrash (areas of user confusion or unnecessary complexity), unused portions of a website, or unmet needs.
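As one hedged example of spotting thrash in analytics data, the sketch below counts immediate back-and-forth navigation (page A, then B, then A again) in each session's page path. Using this pattern as a proxy for confusion is an assumption made for illustration, and the page paths are hypothetical rather than output from any particular analytics tool.

```python
from collections import Counter


def bounce_pairs(path: list[str]) -> Counter:
    """Count A -> B -> A navigation patterns in one session's page path.

    Repeated back-and-forth between the same two pages is treated here as a
    rough, hypothetical proxy for thrash (user confusion).
    """
    counts: Counter = Counter()
    for a, b, c in zip(path, path[1:], path[2:]):
        if a == c and a != b:
            counts[(a, b)] += 1
    return counts


# Hypothetical page paths exported from an analytics tool.
sessions = [
    ["home", "claims", "help", "claims", "help", "claims"],
    ["home", "claims", "submit"],
    ["home", "status", "claims", "status"],
]

total: Counter = Counter()
for path in sessions:
    total += bounce_pairs(path)
print(total.most_common())
```

Page pairs with high counts are candidates for follow-up interviews or usability tests, since the data shows where users struggle but not why.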

Want more of the latest insights from Intevity? Since 2003, we have been delivering world-class service to Fortune 500 companies across Financial Services, Insurance, Retail, and Technology Industries, as well as Federal Government Agencies. Subscribe to stay up to date on the insights we uncover through these complex, multi-disciplinary experiences.